Perhaps you’ve heard the term “dead internet theory” floating around online in the past few months. The conspiracy theory suggests that most of the content we consume online is actually created by bots—maybe as a form of governmental or corporate control. While this exact idea is unrealistic, references to the theory have skyrocketed for a reason. Bot accounts and spam have swarmed X, formerly known as Twitter, and the site’s users have taken notice.

Obviously, X is not the only social media platform to suffer from bot problems, and such issues originated years ago. However, a combination of Elon Musk’s policies and generative AI has pushed spam in front of a large audience of weary X users.

In many ways, X has fallen from grace since it was purchased by Musk for $44 billion in October 2022, and the overall degradation of the user experience stands out as one of the most prominent problems. Recently, accounts promoting explicit content have flooded the reply sections of posts, including ones that haven’t even gone viral. Making the best of an annoying situation, users have been quick to poke fun at these bots that loudly advertise their links.

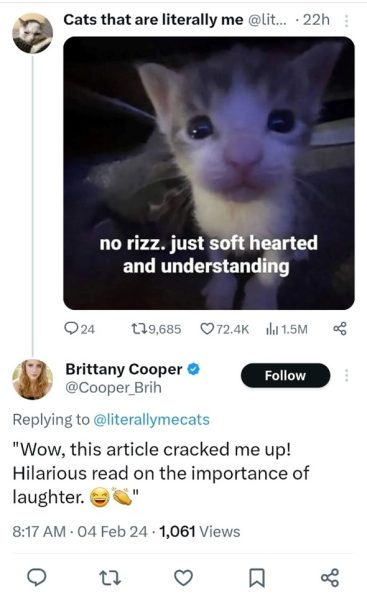

But the recent rise in those accounts isn’t the only issue. Accounts that clearly post AI-generated replies also infest X. Take a look at this “totally real” person’s nonsensical reply to a meme:

Elsewhere on the internet, AI-generated content has rudely made its presence known. We live in a bleak reality where there is furniture on Amazon named “I cannot fulfill this request as it goes against OpenAI use policy.” So, if spam and AI-generated slop are already so pervasive online, then why is the issue so noticeable on X?

The X verification system plays a significant part in making the problem so pronounced. Shortly after Musk took over at Twitter in late 2022, the site had a disastrous unveiling of its new system for giving out coveted blue checkmarks. While some changes have been made over time, the core concept is the same: users can pay for verification.

When the system was originally introduced, Musk claimed that it would reduce the number of spam accounts on the platform. In reality, it seems to have had the opposite effect. Since April 2023, X has favored verified accounts in replies, making sure that you see droves of spam accounts under viral posts before you see replies by real, unverified people. Through this X Premium system, verified accounts can earn ad revenue for “organic impressions,” which has likely incentivized accounts to post (or steal) viral content or spread inflammatory bait.

You might be familiar with this viral video of a diner in New York. It has recently blown up as a meme because it has been reposted to death, but its widespread circulation online has been going on for months (check the date on the above post). In fact, the original video is from August 2022, yet this X account was able to gain millions of views and hundreds of thousands of likes for content it didn’t add any value to.

Of course, reposting viral videos is a problem across all social media platforms, but there’s more! Just six minutes after the above post, another account left a very lengthy reply providing more information about this diner, and it’s near the top of the replies. (You may notice that this account does not appear to have a blue checkmark. X Premium users can hide their checkmarks while still having their replies elevated.)

It’s hard to believe any real person could type up this response in just six minutes. Maybe this account is just very passionate about luncheonettes, but a quick profile visit reveals a lot of content related to NFTs. This account is just one of many taking advantage of the verification system by either posting attention-garnering content or latching onto viral posts, then promoting cryptocurrency or something similar.

Even when there’s seemingly no money to be made, gibberish inexplicably finds a way on X. Check out this ad that took at least a little money to buy:

So why does this all matter? The spam you’ve seen so far in this article is relatively benign—annoying at its worst and unintentionally funny at its best.

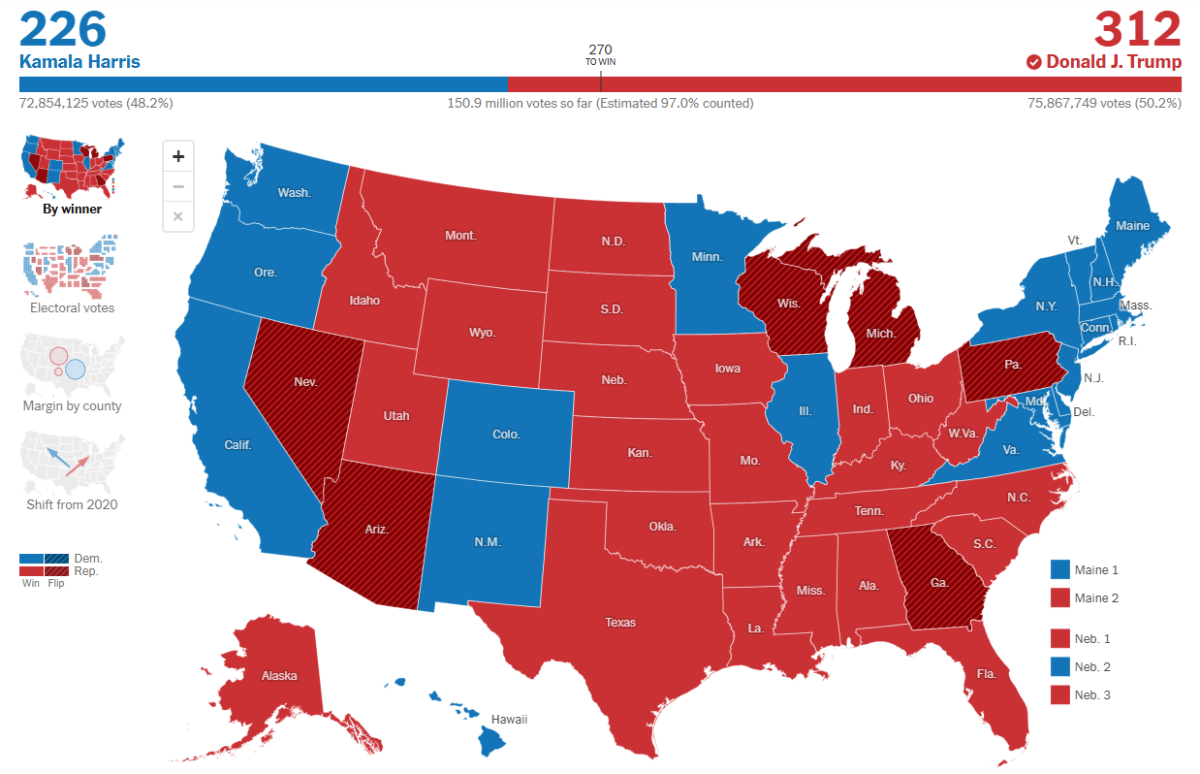

Social media, as unhelpful as it can sometimes be, remains an important tool for gathering and disseminating information. Before being purchased by Elon Musk, Twitter was the preferred social media platform for journalists, and even after the platform became less friendly to journalism, many reporters continued to use it for at least several months. While some bots are merely nuisances, others can be weaponized for widespread misinformation campaigns. This is not a new issue on social media, but the rise of generative AI has made misinformation an even larger threat than it was before.

No one wants to live in a world where the dead internet theory is reality, yet parts of it feel increasingly real every day. As the paid verification system on X has already shown, monetary barriers won’t stop bots from flooding social media. And imposing harsher restrictions, such as a subscription fee for all users, would drive real people off X, even if it might cut down bot numbers.

Bots and spam will always be difficult to fight, and it’s not solely the fault of X’s policies that there are so many bots on the platform. Still, continuing to allow them to promote themselves so invasively probably isn’t the best idea, especially after considering the threat of more serious bot problems.